What is a container?

Containers are blowing up. Everyone is talking about Docker, Kubernetes, and containerization. In this blog post I'm going to dive into what a container is, why containers have become so popular, and clear up some misconceptions.

Let's start by unpacking real-world shipping containers. To a shipper, a container is a box they can put their stuff in and send around the world with little worry. A shipping container can hold a bunch of stuffed toys tossed in any which way, or expensive engineering equipment held in place by specialized fixtures. Containers can hold almost anything, packed however the owner needs. This is by design.

Shipping containers are built to precise standards so they can be stacked, loaded onto trains and trucks, and lifted through the air. No matter who manufactured a container, its standard specification works with all of these systems.

A Linux container is like a shipping container. It has a standardized outside that can clip into many systems like Docker and Kubernetes, but it still holds anything the creator wants inside (like Node.js or Tomcat).

But what is a container?

"Containers are just fancy Linux processes." - Scott McCarty

There is no container object in Linux; it's just not a thing. A container is a process that is isolated using namespaces and limited using cgroups. Linux security modules such as SELinux can additionally grant or restrict fine-grained access for security purposes.

Namespaces give a container its contained quality. They are why a process inside a container can seem to have a whole machine to itself, even appearing as root with full access, while actually seeing only a slice of the host. Linux provides namespaces for kernel-level resources such as:

- PIDs
- hostnames (the UTS, or "UNIX Time-Sharing", namespace)
- mounts
- network interfaces
- user IDs
- interprocess communication (IPC)

A namespace paints a picture of what a process sees on the host machine, which may be a very limited view.
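You can see these namespaces for yourself: on Linux, every process exposes the namespaces it belongs to as symbolic links under `/proc/<pid>/ns`, and two processes share a namespace exactly when their links point to the same identifier. Here is a minimal sketch, assuming a Linux host; `list_namespaces` is a hypothetical helper written for this post, not a standard tool.

```shell
#!/bin/sh
# list_namespaces is a hypothetical helper: print each namespace a process belongs to.
# Usage: list_namespaces [pid]   (defaults to the current shell's PID)
list_namespaces() {
  pid="${1:-$$}"
  for ns in /proc/"$pid"/ns/*; do
    # Each entry is a symlink such as "pid:[<number>]"; the number identifies the namespace.
    printf '%s -> %s\n' "$(basename "$ns")" "$(readlink "$ns")"
  done
}
```

Running `list_namespaces` prints one line per namespace type, such as `pid -> pid:[...]` and `net -> net:[...]`. Passing the host PID of a containerized process (as root) should show different identifiers for the namespaces Docker isolates.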

Try this - Namespaces

Start a container with the default namespaces Docker provides:

```shell
# press q to exit
docker run -it --rm busybox top
```

In the example above, `top` lists the processes running in the busybox container. There is just one, `top` itself, running as PID 1. PID 1 is special because:

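- when PID 1 exits, all other processes in its namespace are killed (with the KILL signal)
- when a process that has children dies, its children are re-parented to PID 1
- signals have no default action for PID 1: unless it installs a handler, signals such as SIGTERM and SIGINT are ignored (only SIGKILL and SIGSTOP sent from the host can force it)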
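The last point explains why some containers ignore `docker stop`, which sends SIGTERM and only falls back to SIGKILL after a grace period (10 seconds by default). Below is a minimal sketch of an entrypoint loop that installs its own SIGTERM handler so it shuts down cleanly even as PID 1; `main_loop` and the `sleep` standing in for real work are hypothetical.

```shell
#!/bin/sh
# main_loop is a hypothetical entrypoint body. As PID 1, the kernel will not apply
# the default "terminate" action to SIGTERM, so we install a handler explicitly.
main_loop() {
  trap 'echo "caught SIGTERM, shutting down"; exit 0' TERM
  while :; do
    # Run the (placeholder) work in the background and block in `wait`,
    # which returns as soon as a trapped signal arrives.
    sleep 1 &
    wait $!
  done
}
```

In a real image you would end the entrypoint script with a call to `main_loop`. Blocking in `wait` matters here: a shell only runs traps between commands, so a long foreground command would delay the handler.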

The key point is that the PID namespace boxed this process in, hiding all other processes from it. We can share the host's PID namespace instead with the `--pid` flag. Running:

```shell
docker run -it --rm --pid host busybox top
```

will show all of the host machine's processes, as well as `top` running in the container. Note that the `top` process is no longer PID 1.

Docker lets you join the namespaces of the host or of other containers (for example, `--pid container:<name>`), which can be useful for monitoring processes.
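To check whether two processes share a PID namespace, compare their `/proc/<pid>/ns/pid` links. A minimal sketch; `same_pid_ns` is a hypothetical helper, and reading another user's namespace links typically requires root.

```shell
#!/bin/sh
# same_pid_ns is a hypothetical helper.
# Exit 0 if both processes share a PID namespace, 1 if they don't,
# and 2 if either namespace link can't be read (missing process or no permission).
same_pid_ns() {
  a=$(readlink "/proc/$1/ns/pid") || return 2
  b=$(readlink "/proc/$2/ns/pid") || return 2
  [ "$a" = "$b" ]
}
```

For example, `docker inspect -f '{{.State.Pid}}' <container>` prints the host PID of a container's main process. Comparing it with the host's PID 1 (`same_pid_ns 1 <that pid>`) should fail for a default `docker run` and succeed for a container started with `--pid host`.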

Cgroups (control groups) control which resources, and how much of each, a container can use, such as memory and CPU. Docker places each container in its own control group, and when you pass limits such as `--memory` or `--cpus` to `docker run`, the cgroup enforces them so that a runaway process, like one with a memory leak, can't consume all of the host's resources. On cgroup v1 hosts using Docker's cgroupfs driver, this cgroup can be inspected at `/sys/fs/cgroup/memory/docker/<container ID>`; on cgroup v2 hosts the layout differs.

Try this - Cgroups

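- Start a hello-world web server container:

```shell
docker run --rm -it -p 80:80 strm/helloworld-http
```

- In another terminal, use `docker ps` to get the container ID (hash):

```
CONTAINER ID   IMAGE
970cafba8013   strm/helloworld-http
```

- Navigate to the cgroup for this container. `docker ps` shows a truncated ID, so don't forget to press Tab to complete the entire hash:

```shell
cd /sys/fs/cgroup/memory/docker/970cafba8013<TAB>
```

- See how much memory it's been allocated:

```shell
cat memory.limit_in_bytes
```

For me this printed `9223372036854771712`. That isn't a real allocation: it is the kernel's way of saying no limit is set, because the container was started without a `--memory` flag.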
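That large number is not real memory: with no limit set, a cgroup v1 `memory.limit_in_bytes` reports the largest signed 64-bit value (2^63 - 1) rounded down to a multiple of the page size, which with 4096-byte pages is exactly 9223372036854771712. A minimal sketch that decodes the value, assuming cgroup v1; `describe_limit` is a hypothetical helper.

```shell
#!/bin/sh
# describe_limit is a hypothetical helper for a cgroup v1 memory.limit_in_bytes value.
# "No limit" is reported as (2^63 - 1) rounded down to a multiple of the page size.
page_size=$(getconf PAGESIZE)
unlimited=$(( (9223372036854775807 / page_size) * page_size ))

describe_limit() {
  if [ "$1" -eq "$unlimited" ]; then
    echo "unlimited"
  else
    # Convert bytes to mebibytes (integer division).
    echo "$(( $1 / 1024 / 1024 )) MiB"
  fi
}
```

Inside the cgroup directory, `describe_limit "$(cat memory.limit_in_bytes)"` should print `unlimited` for the container above. Starting it with a limit instead, for example `docker run --rm -it -m 256m -p 80:80 strm/helloworld-http`, should make the same check print `256 MiB`.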

Since a container is just a handful of kernel structures combined to build a containerized process, it stands to reason that the definition of a container will vary from runtime to runtime. Runtimes will be explored in more detail in a future post.

Where does Docker fit into this?

You might be wondering why people are so fired up about containers now. Before Docker, containerized processes were difficult to create, and it was difficult to ensure they included all the necessary dependencies. Docker's role in popularizing containers came from its simple toolset, which can build, run, and monitor containers, and much more.

Docker uses images as reusable templates for creating containers. An image includes everything needed to create a completely reproducible containerized process. Images are also layered, which lets you share common dependencies as layers stacked from the bottom up. Building an image is easy with Dockerfiles: a Dockerfile lets you start from another image as your base, or from scratch. Here's an example Dockerfile:

```dockerfile
# our base starting image
FROM strm/helloworld-http
RUN mkdir /new-www
WORKDIR /new-www
RUN echo "Hello Docker layer tests" > index.html
ENTRYPOINT ["python", "-m", "SimpleHTTPServer", "80"]
```

Each instruction in the Dockerfile above is a build step, and the ones that change the filesystem (RUN, COPY, and ADD) each add a new layer to the image. The steps form a chain from top to bottom: if a step changes, every step after it must be rebuilt, while the steps before it are reused from the build cache. This means you can build images strategically by putting the instructions that change most frequently near the end of the Dockerfile.

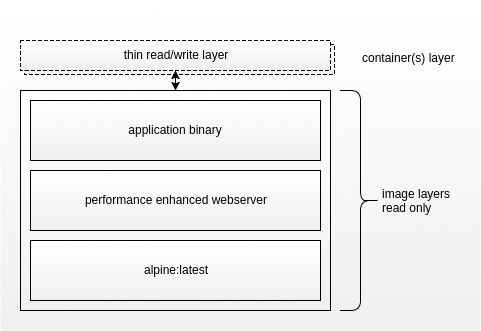

For example, consider a web app container built from an image with three layers. The first layer is Alpine Linux, a tiny Linux distribution. The infrastructure team adds a second layer containing a performance-tuned web server on top of the Alpine base. The third layer is owned by the application team, who copy their binary and dependencies into the image.

The layering system is significant for two reasons. First, layers are reusable, so the web server layer in the example above can be shared by many teams in the company. Second, when rebuilding an image you only have to start from the first layer that changed. If the first or second layer hasn't changed, it doesn't need to be rebuilt or downloaded again, which is advantageous for shipping applications: a base image can hold the dependencies that rarely change, and the next layer can hold the release, for fast builds and distribution.
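The cache rule can be modeled in a few lines. A minimal sketch, assuming one instruction per line; `cache_report` is a hypothetical helper, and it deliberately ignores details of Docker's real build cache, such as checksumming the files used by COPY and ADD.

```shell
#!/bin/sh
# cache_report is a hypothetical, simplified model of Docker's build cache:
# a step is reused only if it and every step before it are unchanged.
# Usage: cache_report OLD_DOCKERFILE NEW_DOCKERFILE
cache_report() {
  changed=0
  i=0
  while IFS= read -r line; do
    i=$((i + 1))
    old=$(sed -n "${i}p" "$1")   # the step at the same position in the old file
    if [ "$changed" -eq 0 ] && [ "$line" = "$old" ]; then
      echo "CACHED  $line"
    else
      changed=1                  # every later step now has a changed parent
      echo "REBUILD $line"
    fi
  done < "$2"
}
```

Changing the second step of a three-step file marks steps two and three as REBUILD, even if step three is textually identical, because its parent changed. That is why the instructions that change most often belong at the end.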

Docker combined all the tools you need to run, build, troubleshoot, and share images containing applications with complex dependencies. Alongside this package, complete and easy-to-consume documentation makes it easy to ramp up without having to understand the complex details of Linux processes.

There is also something to be said for timing. LXC, another Linux container system, was released five years before Docker. At that time traditional virtual machines were mainstream, and the DevOps culture shift had yet to gain the massive momentum it would in the years to come. When the ecosystem was ready, Docker added fuel to the fire, popularizing contained systems that could start and stop on a dime while using a fraction of the resources of virtual machines.

In summary, we've learned that containers are fancy Linux processes whose normal system surroundings are hidden away. We've seen demonstrations of how namespaces can hide or reveal system resources and how cgroups protect those resources. A "container" is just a combination of Linux kernel data structures, and its exact definition is loose.